Validating the Model Deployment

In this section, we’ll validate and test our deployed RoShambo game model using the JupyterHub environment in OpenShift AI.

1. Testing the Model Deployment

Go back to the browser tab where you opened the Jupyter notebook. If you closed it, go to Data Science Projects, open wksp-{user}, select Workbenches, and open the workbench again.

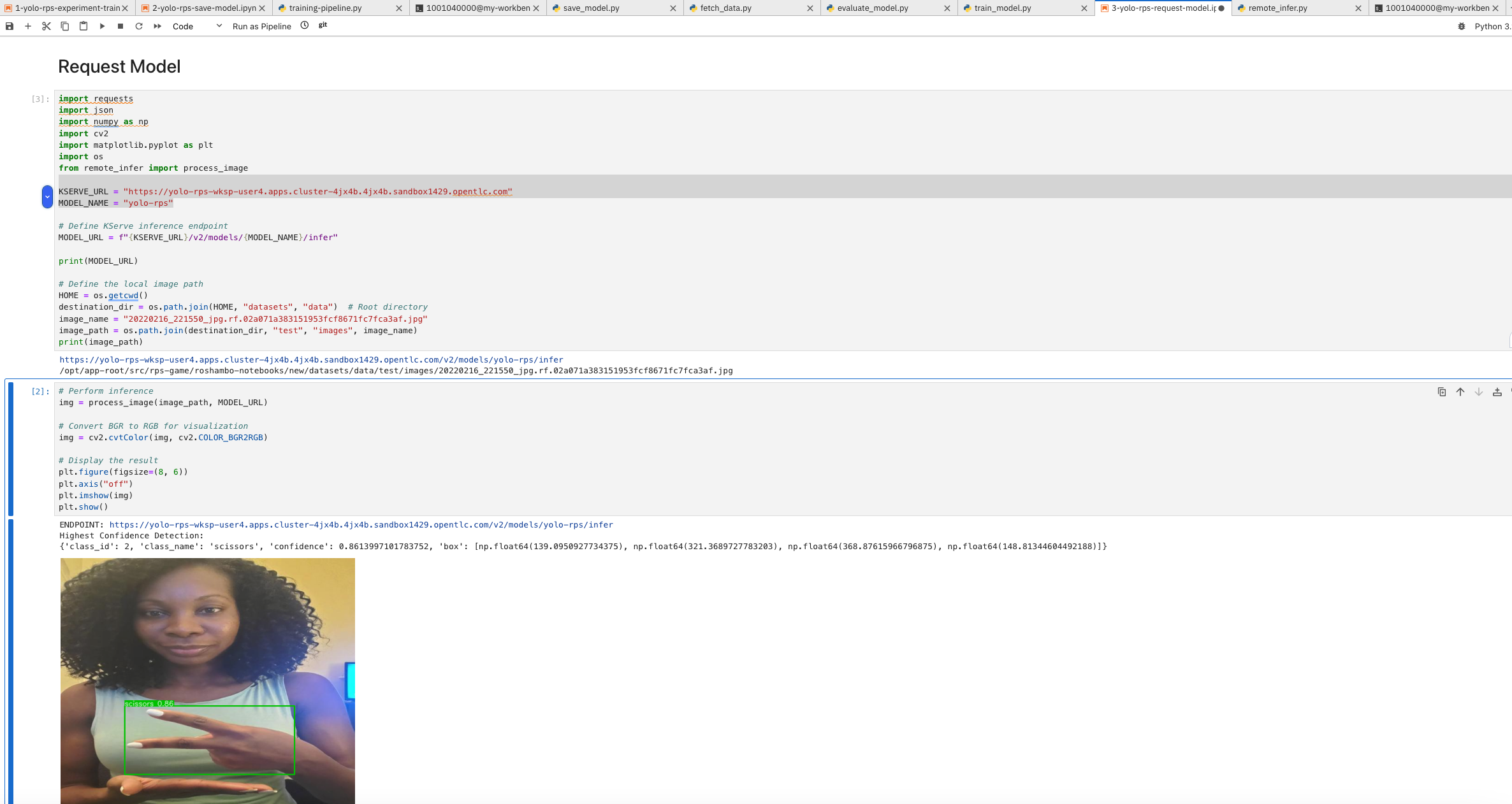

In the Jupyter environment, open the 3-yolo-rps-request-model.ipynb notebook and modify the following lines:

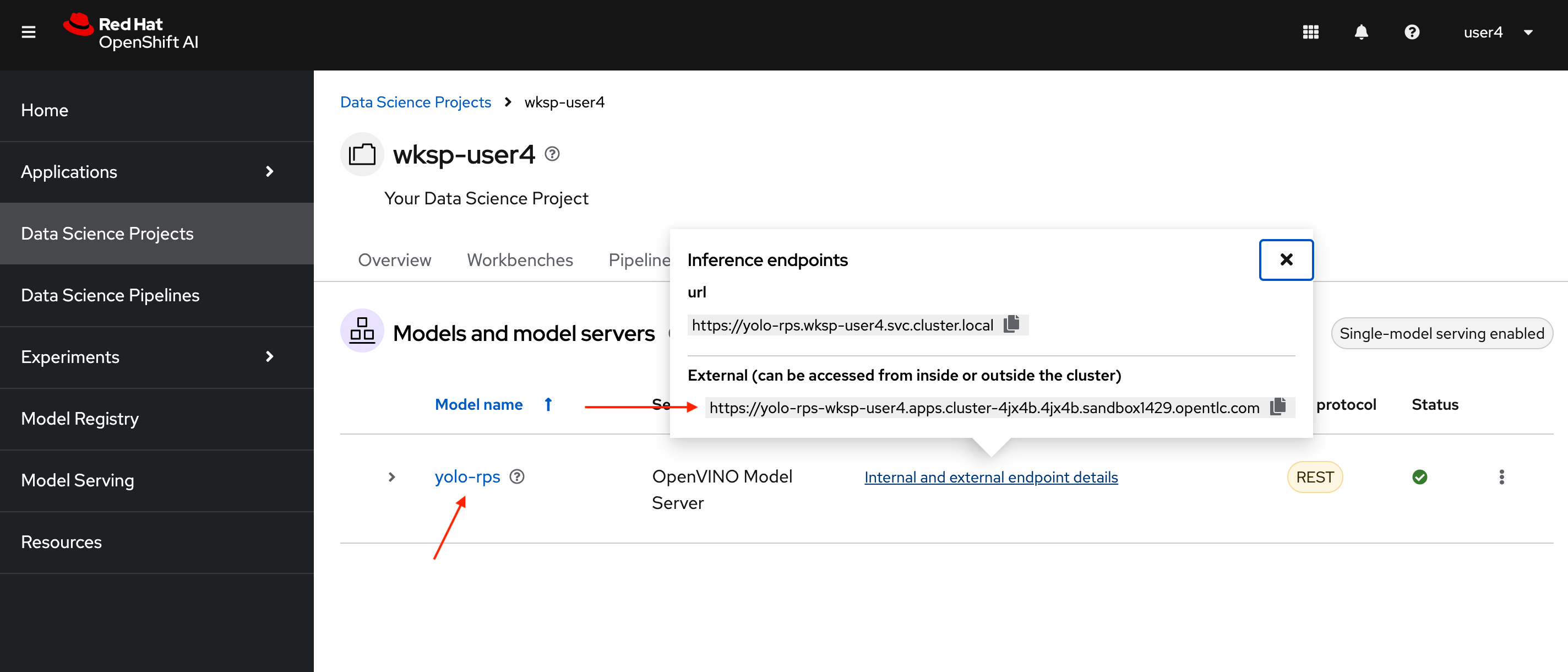

```python
KSERVE_URL = "https://yolo-rps-wksp-{user}.{openshift_cluster_ingress_domain}"
MODEL_NAME = "yolo-rps"
```

| You can find the inference API URL and the model name in the Model Serving section, as explained earlier. |
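A minimal sketch of how these two values typically combine into request URLs, assuming the model server exposes the KServe V2 (Open Inference Protocol) REST API. The path layout comes from that protocol, not from this guide, so confirm it against the request code in the notebook.

```python
# ASSUMPTION: the model server speaks the KServe V2 (Open Inference Protocol) REST API.
KSERVE_URL = "https://yolo-rps-wksp-{user}.{openshift_cluster_ingress_domain}"
MODEL_NAME = "yolo-rps"


def v2_endpoints(base_url: str, model_name: str) -> dict[str, str]:
    """Return the V2 protocol URLs for a single model."""
    root = base_url.rstrip("/")  # avoid a double slash if the URL ends with "/"
    model = f"{root}/v2/models/{model_name}"
    return {
        "ready": f"{model}/ready",  # GET: succeeds once the model can serve requests
        "metadata": model,          # GET: input/output names, shapes and datatypes
        "infer": f"{model}/infer",  # POST: run inference
    }


for name, url in v2_endpoints(KSERVE_URL, MODEL_NAME).items():
    print(f"{name:8} {url}")
```

If the route is reachable and no authentication is required, a GET on the ready URL should return HTTP 200 once the model is loaded, which is a quick check before running the rest of the notebook.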

Let’s run the notebook to test the deployed model. Click the Play button in the top toolbar to execute the cells in order: the first cells install the required libraries and set variables such as the inference URL and the model and input names, and the next ones load the image and send it to the model in an HTTP request. Finally, the notebook displays the image with the detected hand shape. In the first version, this returned an error.
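The HTTP call can be sketched as follows using only the Python standard library, assuming the KServe V2 REST protocol. The input name images and the 1 x 3 x 640 x 640 shape are hypothetical placeholders; the real values come from the notebook or from the model's metadata endpoint.

```python
import json
import math
import urllib.request


# ASSUMPTION: the model server accepts KServe V2 (Open Inference Protocol) JSON.
# "images" is a hypothetical input name; check the model metadata for the real one.
def build_infer_request(pixels: list[float], shape: list[int], input_name: str = "images") -> dict:
    """Wrap a flattened, row-major FP32 tensor in a V2 inference request body."""
    expected = math.prod(shape)
    if len(pixels) != expected:
        raise ValueError(f"got {len(pixels)} values, but shape {shape} needs {expected}")
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": shape,
                "datatype": "FP32",
                "data": pixels,
            }
        ]
    }


def infer(infer_url: str, payload: dict, timeout: float = 30.0) -> dict:
    """POST the request body as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        infer_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, infer(f"{KSERVE_URL}/v2/models/{MODEL_NAME}/infer", build_infer_request(pixels, [1, 3, 640, 640])) would return the decoded JSON response, where pixels is the preprocessed image as a flat list of floats. The length check catches shape mismatches locally instead of as a server-side error.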

| The notebook loads the hand gesture images from a specified directory and preprocesses them to match the input requirements of the model. This involves resizing the images to the expected dimensions and normalizing the pixel values. |
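The preprocessing described in this note can be sketched in plain Python. This is an illustration under stated assumptions, not the notebook's code: the image is an H x W x 3 nested list of 0-255 RGB values, the model expects a square 640 x 640 input in channel-first order, and nearest-neighbour resizing stands in for whatever resampling the notebook actually uses (libraries such as OpenCV or Pillow are the usual choice).

```python
# ASSUMPTIONS: H x W x 3 RGB input with 0-255 values, square output of `size`
# pixels, channel-first (CHW) layout, nearest-neighbour resampling.
def preprocess(image: list[list[list[int]]], size: int = 640) -> list[float]:
    """Resize, normalize to 0.0-1.0 and flatten an RGB image in CHW order."""
    height, width = len(image), len(image[0])
    # Nearest-neighbour resize: map each output pixel back to a source pixel.
    resized = [
        [image[y * height // size][x * width // size] for x in range(size)]
        for y in range(size)
    ]
    # Scale 0-255 to 0.0-1.0 and reorder HWC -> CHW while flattening.
    return [
        resized[y][x][c] / 255.0
        for c in range(3)
        for y in range(size)
        for x in range(size)
    ]
```

The result holds 3 x size x size values, which matches a 1 x 3 x size x size FP32 tensor when sent to the model.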

Yay! It works much better.

2. Next Steps

After running the notebook, you should see the model’s predicted hand gesture for the input image. If the model correctly identifies the gesture, the deployment is successful.
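To check the prediction programmatically, a sketch like the following picks the highest-scoring gesture from the response. It assumes a KServe V2 response whose first output has the raw YOLOv8 detection layout [1, 4 + number of classes, number of candidate boxes], with box coordinates in the first four rows. The LABELS order and the 0.5 threshold are hypothetical, so match them to the classes the model was trained on.

```python
# ASSUMPTIONS: V2 JSON response; first output shaped [1, 4 + num_classes, num_anchors]
# with rows (cx, cy, w, h, class scores...). LABELS order is hypothetical.
LABELS = ["paper", "rock", "scissors"]


def best_gesture(response: dict, labels: list[str] = LABELS, threshold: float = 0.5):
    """Return (label, score, [cx, cy, w, h]) for the top detection, or None."""
    output = response["outputs"][0]
    _, rows, anchors = output["shape"]
    data = output["data"]  # flattened row-major: data[row * anchors + anchor]
    num_classes = rows - 4
    if num_classes != len(labels):
        raise ValueError(f"model reports {num_classes} classes, got {len(labels)} labels")
    best_score, best_label, best_box = 0.0, None, None
    for anchor in range(anchors):
        for cls in range(num_classes):
            score = data[(4 + cls) * anchors + anchor]
            if score > best_score:
                box = [data[r * anchors + anchor] for r in range(4)]
                best_score, best_label, best_box = score, labels[cls], box
    if best_score < threshold:
        return None  # nothing detected with enough confidence
    return best_label, best_score, best_box
```

Taking only the single best score skips non-maximum suppression, which is enough for an image showing one hand; an image with several hands would need NMS to report each detection separately.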

In the next section, we’ll deploy the game application using Argo CD and configure it to communicate with the model server for real-time hand gesture classification.