Welcome to the Tutorial!

Welcome to "Rock, Paper, Scissors! Build an AI-Powered Interactive Game with Argo CD and Kubeflow". We’re glad to have you here with us at this event!

1. About this lab

This tutorial provides you with a shared OpenShift cluster and a series of exercises that teach you how to use Kubeflow and Argo CD to build and deploy an AI-powered interactive game called RoShambo. You will learn how to use Kubeflow to automate model construction through data science pipelines and to deploy models via the model server. You will also learn how to use Argo CD to deploy models and applications following GitOps principles.

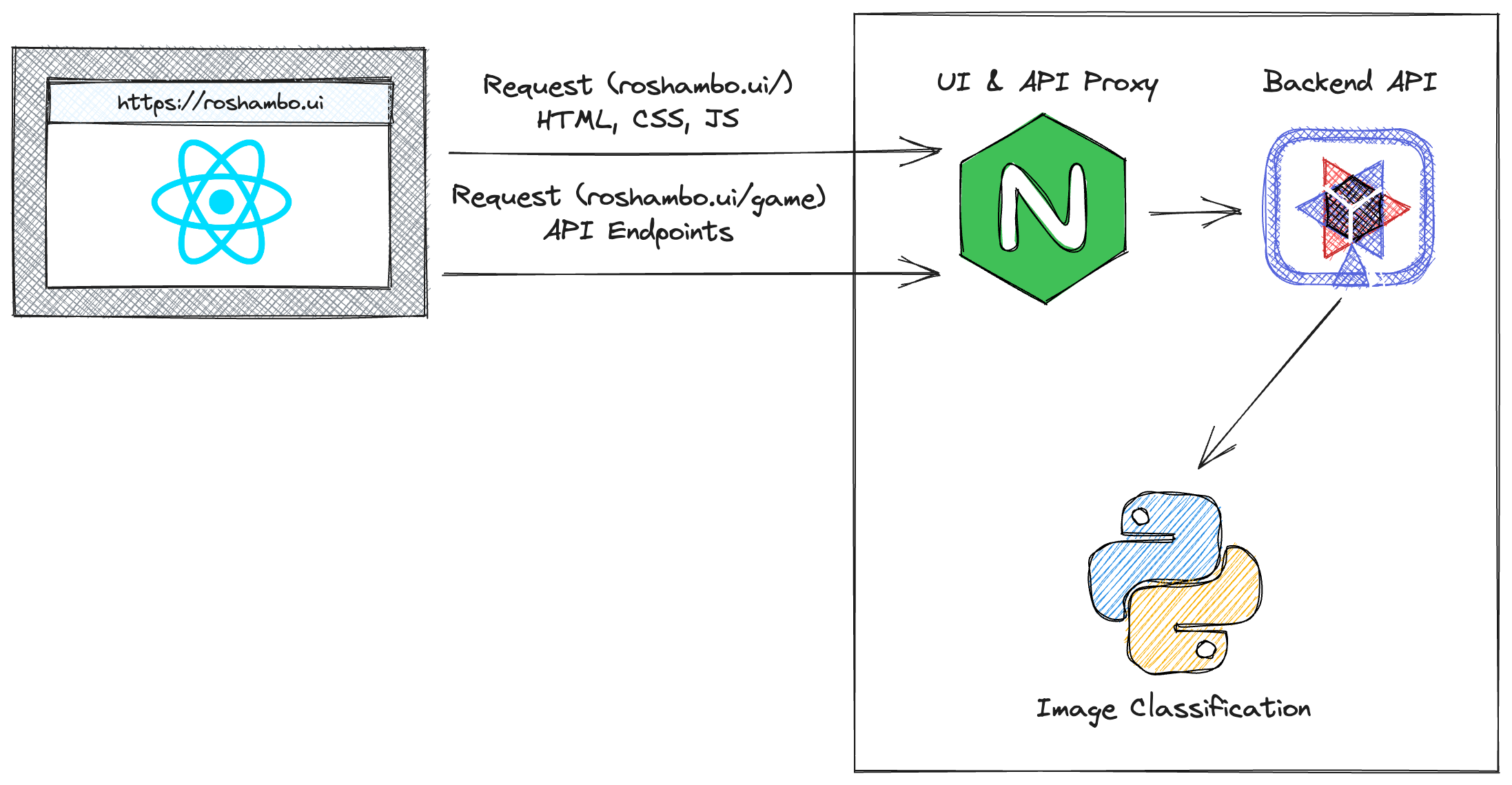

We’ll be deploying a multi-component application which includes a frontend built with React, a backend using Quarkus, and an AI model built and trained with PyTorch.

2. Introduction

The demo application in this workshop is an interactive game called RoShambo, which lets users play the classic game of Rock, Paper, Scissors against an AI-powered opponent. You will work with two main components: the AI model and the game application.

By the end of this lab, you will have a better understanding of how to deploy AI models using Kubeflow, automate model construction with data science pipelines, serve models with KServe, and use Argo CD to deploy AI workloads. The workshop includes the following steps:

- Work with an existing OpenShift cluster and access Kubeflow
- Create a Data Science project and set up a workbench
- Use JupyterHub to open an existing Data Science project and explore its notebooks
- Run a data science pipeline to train the AI model
- Create a model server with OpenVINO and KServe and test the AI model
- Deploy the AI proxy and game application using Argo CD
- Interact with the game, both with and without camera input
- Enable the multiplayer feature and play together with other workshop participants
- Explore MLOps automation with Kubeflow, Pipelines, KServe and GitOps

3. What is Kubeflow?

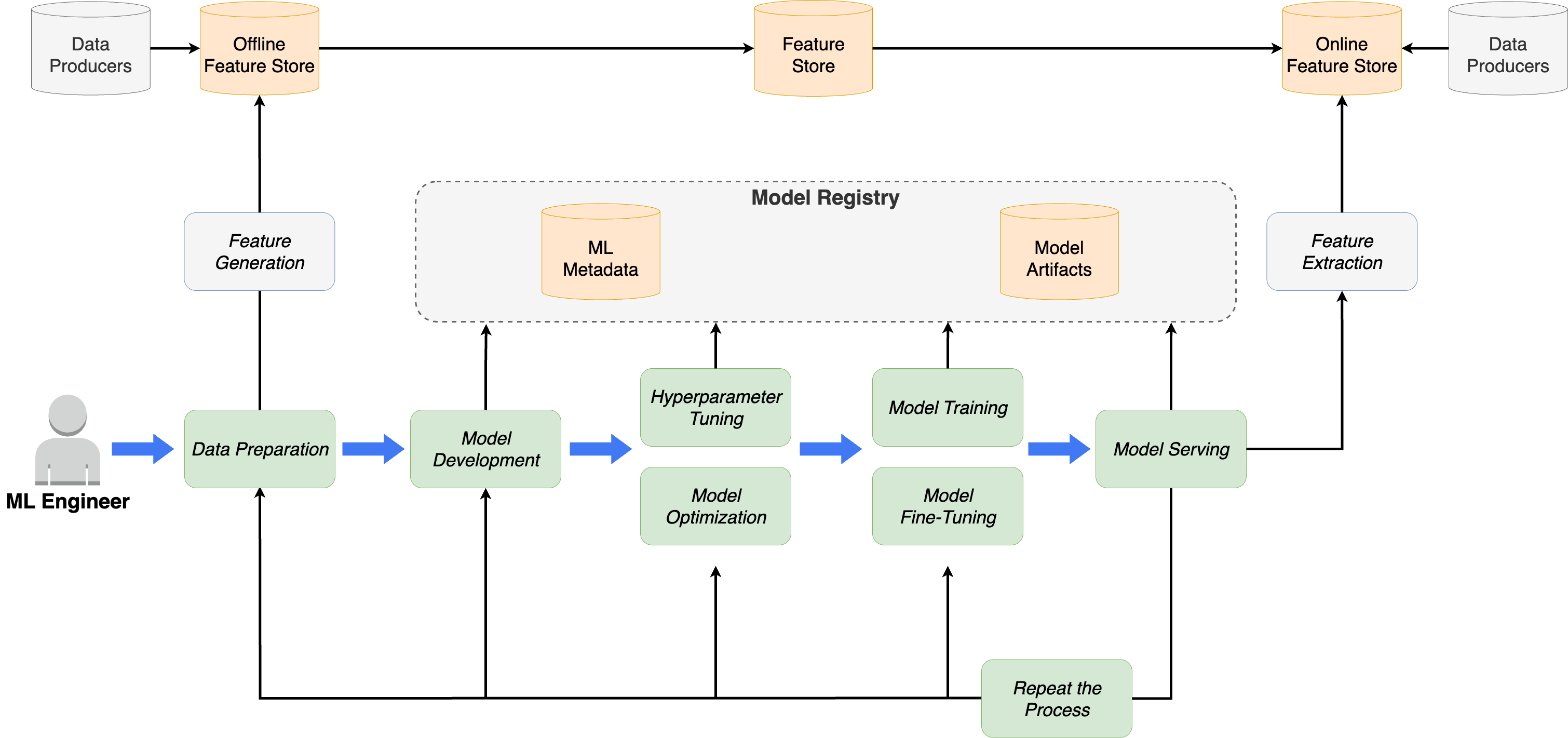

Kubeflow is an open-source machine learning (ML) platform dedicated to simplifying the deployment and management of ML workflows on Kubernetes. Here’s a breakdown of its key features:

- Kubeflow Pipelines enable the creation and management of portable and scalable ML workflows. These workflows are defined as pipelines, where each step is containerized.
- Katib handles hyperparameter tuning, automating the search for the optimal parameters for ML models.
- KServe (formerly KFServing) simplifies the deployment of trained ML models for production use, providing a serverless inference platform.
- Kubeflow allows users to run Jupyter notebooks within Kubernetes clusters, providing a flexible development environment.
- Kubeflow provides metadata tracking, which supports experiment tracking and model versioning.
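
To make the idea of a pipeline of containerized steps concrete, here is a minimal sketch in plain Python. It does not use the Kubeflow Pipelines SDK; it only models the concept that each step runs in its own container image and starts once the steps it depends on have finished. The step names and image references are hypothetical and are not taken from the workshop repository.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical steps for illustration only. Each step names the container
# image it would run in and the steps that must finish before it starts.
steps = {
    "fetch-data": {"image": "example.com/roshambo/fetch:latest", "after": []},
    "train-model": {"image": "example.com/roshambo/train:latest", "after": ["fetch-data"]},
    "evaluate-model": {"image": "example.com/roshambo/evaluate:latest", "after": ["train-model"]},
    "export-model": {"image": "example.com/roshambo/export:latest", "after": ["evaluate-model"]},
}


def execution_order(pipeline):
    """Return the step names in an order that respects every dependency."""
    graph = {name: set(spec["after"]) for name, spec in pipeline.items()}
    return list(TopologicalSorter(graph).static_order())


order = execution_order(steps)
for name in order:
    print(f"{name}: runs in {steps[name]['image']}")
```

A real pipeline engine does the same bookkeeping at a larger scale: it resolves the dependency graph, then schedules each step as a container on the cluster.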

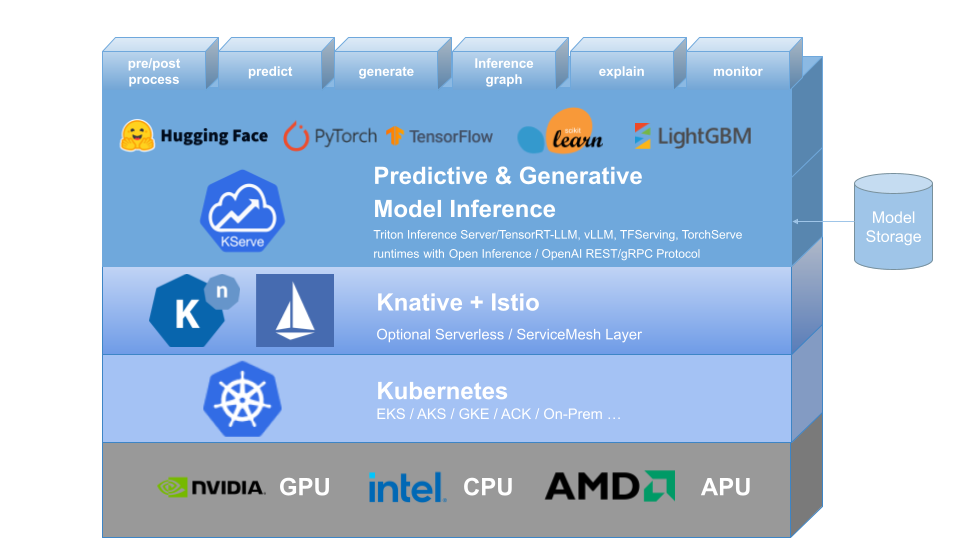

4. What is KServe?

KServe is a standard Model Inference Platform on Kubernetes, built for highly scalable use cases.

- Provides a performant, standardized inference protocol across ML frameworks, including the OpenAI specification for generative models.
- Supports modern serverless inference workloads with request-based autoscaling, including scale-to-zero on CPU and GPU.
- Provides high scalability, density packing, and intelligent routing using ModelMesh.
- Supports advanced deployments such as canary rollouts, pipelines, and ensembles with InferenceGraph.
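
As an illustration of the standardized inference protocol mentioned above, the following sketch builds a request body in the shape used by the Open Inference Protocol (V2), which KServe supports. The model name `roshambo`, the input tensor name `input`, and the tensor shape are assumptions made for this example; the model served in the workshop may use different names and shapes. The sketch only constructs and prints the request and does not contact a server.

```python
import json


def build_infer_request(input_name, shape, values, datatype="FP32"):
    """Build a V2 inference request body for a single input tensor.

    `values` is the tensor flattened in row-major order, so its length
    must equal the product of the dimensions in `shape`.
    """
    expected = 1
    for dim in shape:
        expected *= dim
    if len(values) != expected:
        raise ValueError(f"shape {shape} needs {expected} values, got {len(values)}")
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": datatype,
                "data": list(values),
            }
        ]
    }


MODEL_NAME = "roshambo"  # hypothetical model name
path = f"/v2/models/{MODEL_NAME}/infer"
body = build_infer_request("input", [1, 4], [0.1, 0.2, 0.3, 0.4])

print("POST", path)
print(json.dumps(body, indent=2))
```

Because the request shape is the same regardless of the framework behind the model, a client written against this protocol does not need to change when the model server implementation does.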

5. What is OpenVINO Model Server?

OpenVINO Model Server (OVMS) is a high-performance system designed to serve machine learning models optimized by the OpenVINO toolkit. It enables efficient deployment of AI models in production environments, facilitating scalable and reliable inference across various applications.

- Flexible Deployment: OVMS supports deployment across diverse environments, including Docker containers, bare metal servers, and orchestration platforms like Kubernetes and OpenShift.
- Standardized APIs: It provides REST and gRPC application programming interfaces, allowing client applications written in any programming language that supports these protocols to perform remote inference.
- Efficient Resource Utilization: OVMS is optimized for both horizontal and vertical scaling, ensuring efficient use of computational resources to meet varying workload demands.
- Secure Model Management: Models are stored either locally or in remote object storage services, and are not directly exposed to client applications, enhancing security and access control.
- Simplified Client Integration: Clients require minimal updates since the server abstracts the model details, providing a stable interface for inference requests.
- Microservices Architecture Compatibility: OVMS is ideal for microservices-based applications, facilitating seamless integration into cloud environments and container orchestration systems.
- Dynamic Model Management: When serving multiple models, OVMS allows for runtime configuration changes without needing to restart the server, enabling the addition, deletion, or updating of models on the fly.
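
The dynamic model management point can be pictured as editing a configuration file that the server watches. The sketch below assumes the multi-model configuration layout with a top-level `model_config_list`, where each entry has a `config` object containing `name` and `base_path`. The model names and paths are hypothetical, and the helper functions are written for this example rather than provided by OVMS.

```python
import json


def add_model(config, name, base_path):
    """Return a new config with the model added, replacing any entry of the same name."""
    entries = [e for e in config["model_config_list"] if e["config"]["name"] != name]
    entries.append({"config": {"name": name, "base_path": base_path}})
    return {"model_config_list": entries}


def remove_model(config, name):
    """Return a new config without the named model."""
    entries = [e for e in config["model_config_list"] if e["config"]["name"] != name]
    return {"model_config_list": entries}


# Hypothetical model names and storage paths, for illustration only.
config = {"model_config_list": []}
config = add_model(config, "roshambo", "/models/roshambo")
config = add_model(config, "roshambo-experimental", "/models/roshambo-experimental")
config = remove_model(config, "roshambo-experimental")

print(json.dumps(config, indent=2))
```

Writing the resulting JSON to the configuration file is what would let a running server pick up the change, assuming it has been started in a mode that reloads that file.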

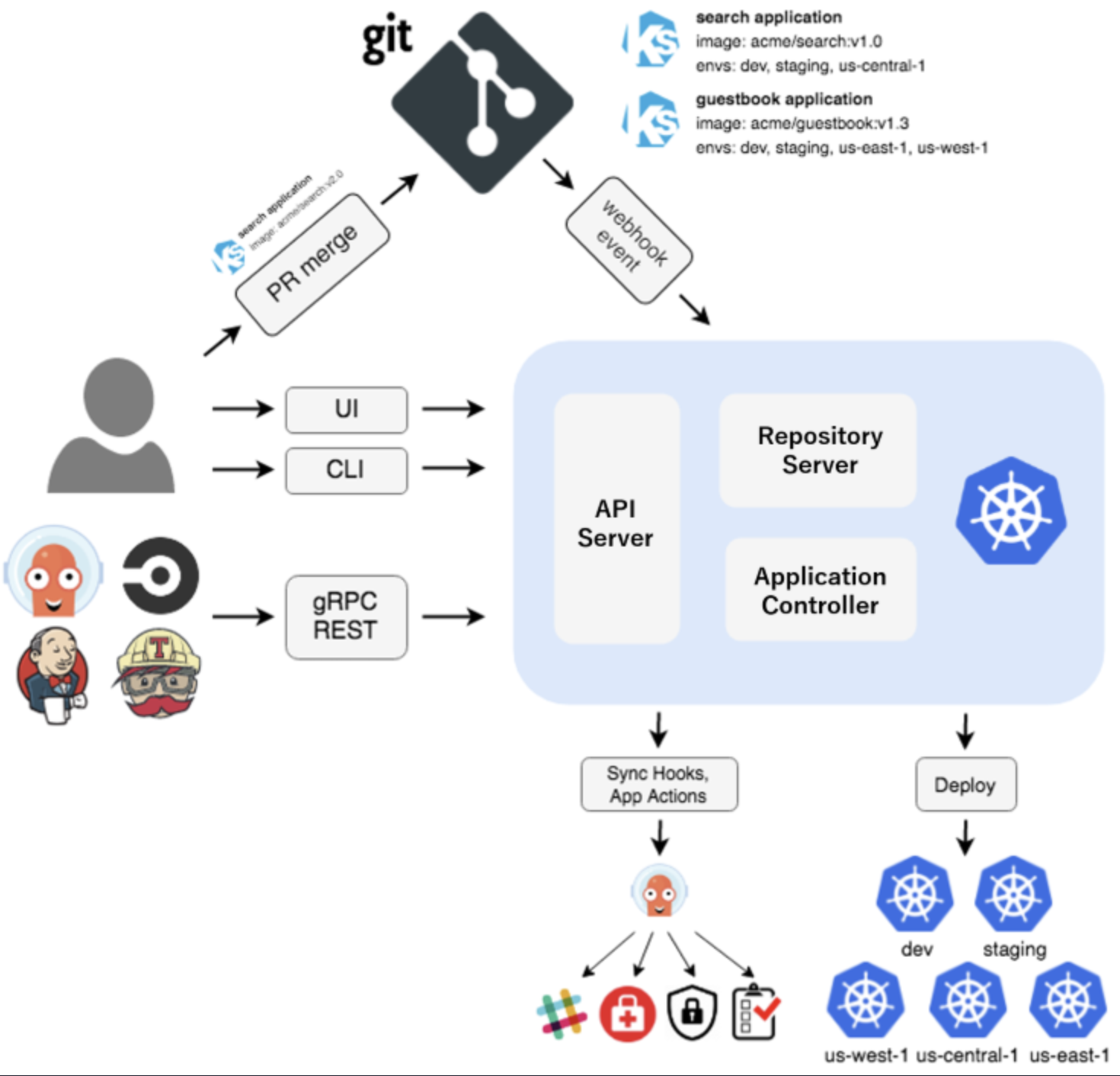

6. What is Argo CD?

Argo CD is a powerful, declarative, GitOps continuous delivery tool for Kubernetes. In simpler terms, it helps automate the deployment of applications to Kubernetes clusters. Here’s a breakdown of its key functionalities:

- Automates the deployment of applications to Kubernetes clusters.
- Automatically synchronizes the application’s live state with the desired state in Git.
- Provides a user-friendly web UI for visualizing and managing application deployments.
- Offers a CLI for interacting with Argo CD.
- Supports various configuration formats, including YAML, Helm charts, and Kustomize.
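
To show what "desired state in Git" looks like in practice, here is a sketch that assembles an Argo CD `Application` resource as a Python dictionary and prints it as JSON. The repository URL, path, application name, and namespaces are placeholders invented for this example, and the `argocd` namespace is an assumption (OpenShift installations often use a different one). The field names follow the `argoproj.io/v1alpha1` Application resource.

```python
import json


def build_application(name, repo_url, path, dest_namespace, argocd_namespace="argocd"):
    """Assemble an Argo CD Application that tracks a directory in a Git repository."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": argocd_namespace},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": "HEAD",
                "path": path,
            },
            "destination": {
                # Address of the cluster that Argo CD itself runs in.
                "server": "https://kubernetes.default.svc",
                "namespace": dest_namespace,
            },
            # Automated sync keeps the live state aligned with Git:
            # prune removes resources deleted from Git, selfHeal reverts drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


# Placeholder values, not taken from the workshop environment.
app = build_application(
    name="roshambo-game",
    repo_url="https://example.com/your-org/roshambo-gitops.git",
    path="game",
    dest_namespace="roshambo",
)

print(json.dumps(app, indent=2))
```

Since JSON is a subset of YAML, the printed output has the same structure as the YAML manifest you would normally commit or apply.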