Istio for Promoting Services (Feature Graduation)


Before Start

You should have NO virtualservice, destinationrule, gateway or policy (in

In this chapter, we are going to see how to use Istio to promote a service to a wider group of users, depending on their configuration.

What is a Service Promotion?

The idea behind service promotion is that you can promote the different versions of a service so that they are gradually exposed to your public traffic.

You define different levels to which your service is promoted, and at each level the service is expected to be more stable than at the previous one. You can think of it as graduating services to classes of users.

There are several ways of doing this, but one approach we use in OpenShift IO is to let users choose whether they want to try the latest deployed version of the application (experimental level) or a stable version of the application (stable level).

This means that the public cluster runs more than one version of the same service, and public traffic can reach all of them.

So let’s see how to implement this approach using Istio.

Create recommendation:v3


Make sure that you do not have recommendation:v3 deployed in the cluster and that the recommendation code base is correct. To check whether the recommendation source code is correct, check that recommendation/java/vertx/src/main/java/com/redhat/developer/demos/recommendation/RecommendationVerticle.java

You might have recommendation:v3 deployed because you completed the Egress section and did not clean up the resources. If that is the case, follow the Egress Clean Up section. If it is not the case, then run:

We can experiment with Istio controlling traffic by making a change to RecommendationVerticle.java like the following and creating a "v3" Docker image:

private static final String RESPONSE_STRING_FORMAT = "recommendation v3 from '%s': %d\n";

The "v3" tag during the Docker build is significant.
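To see what the modified constant produces, the following sketch formats it the way the curl output later in this chapter suggests: the pod hostname fills %s and a request counter fills %d. Only the format string comes from RecommendationVerticle.java; the class name, the render helper and the sample values are hypothetical.

```java
public class ResponseFormatDemo {
    // Format string copied from the change to RecommendationVerticle.java.
    private static final String RESPONSE_STRING_FORMAT = "recommendation v3 from '%s': %d\n";

    // Hypothetical helper: fills in the pod hostname and the request counter.
    static String render(String hostname, int count) {
        return String.format(RESPONSE_STRING_FORMAT, hostname, count);
    }

    public static void main(String[] args) {
        // Sample hostname and counter taken from the curl output in this chapter.
        System.out.print(render("6953441398-tyw25", 1));
    }
}
```

Judging by the curl output, the customer and preference services prepend "customer => preference => " to this string before it reaches you.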

There is also a separate Deployment file (kubernetes/Deployment-v3.yml) to label things correctly.

Docker build (if you have access to a Docker daemon)

cd recommendation/java/vertx

mvn clean package

docker build -t example/recommendation:v3 .

docker images | grep recommendation

example/recommendation v3 c31e399a9628 1 seconds ago 438MB

example/recommendation v2 c31e399a9628 5 seconds ago 438MB

example/recommendation v1 f072978d9cf6 8 minutes ago 438MB

We have a third Deployment to manage the v3 version of recommendation.

oc apply -f <(istioctl kube-inject -f ../../kubernetes/Deployment-v3.yml) -n tutorial

oc get pods -w -n tutorial

or

kubectl apply -f <(istioctl kube-inject -f ../../kubernetes/Deployment-v3.yml) -n tutorial

kubectl get pods -w -n tutorial

OpenShift S2I strategy (if you DON’T have access to a Docker daemon)

mvn clean package -f recommendation/java/vertx

oc new-app -l app=recommendation,version=v3 --name=recommendation-v3 --context-dir=recommendation/java/vertx -e JAEGER_SERVICE_NAME=recommendation JAEGER_ENDPOINT=http://jaeger-collector.istio-system.svc:14268/api/traces JAEGER_PROPAGATION=b3 JAEGER_SAMPLER_TYPE=const JAEGER_SAMPLER_PARAM=1 JAVA_OPTIONS='-Xms128m -Xmx256m -Djava.net.preferIPv4Stack=true' fabric8/s2i-java~https://github.com/redhat-scholars/istio-tutorial -o yaml > recommendation-v3.yml

oc apply -f <(istioctl kube-inject -f recommendation-v3.yml) -n tutorial

oc cancel-build bc/recommendation-v3 -n tutorial

oc delete svc/recommendation-v3 -n tutorial

oc start-build recommendation-v3 --from-dir=. --follow -n tutorial

Wait for v3 to be deployed

Wait for those pods to show "2/2"; the istio-proxy/envoy sidecar is part of each pod.

NAME READY STATUS RESTARTS AGE

customer-3600192384-fpljb 2/2 Running 0 17m

preference-243057078-8c5hz 2/2 Running 0 15m

recommendation-v1-60483540-9snd9 2/2 Running 0 12m

recommendation-v2-2815683430-vpx4p 2/2 Running 0 15s

recommendation-v3-9834632434-urd34 2/2 Running 0 15s

Then test the customer endpoint until you can see that v3 has been reached:

curl customer-tutorial.$(minishift ip).nip.io

Feature Graduation

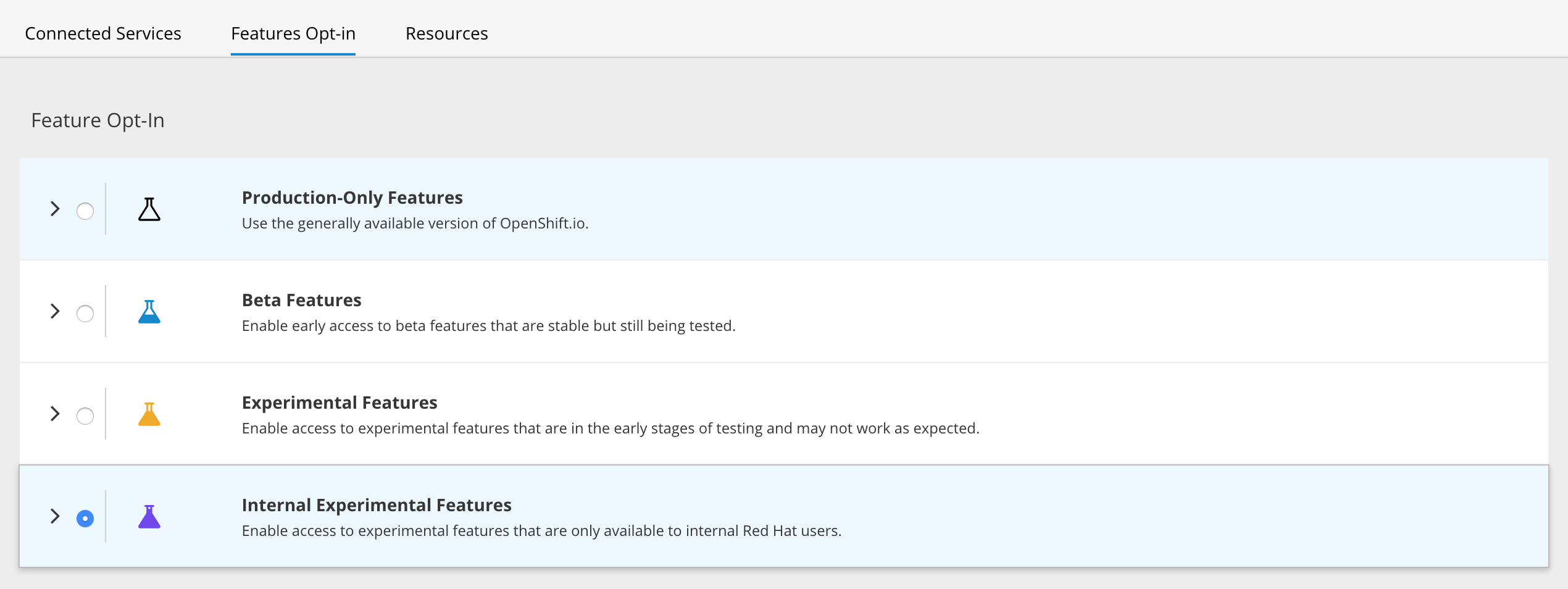

With three versions of the recommendation service deployed, we can now define three different levels of access, for example experimental, beta and production.

With Istio, we can use routing rules to send traffic to a version based on each user's configuration.

istioctl create -f istiofiles/destination-rule-recommendation-v1-v2-v3.yml -n tutorial

istioctl create -f istiofiles/virtual-service-promotion-v1-v2-v3.yml -n tutorial

Now, we need to set a header named user-preference with a specific value:

- For version 3: 123 (experimental)
- For version 2: 12 (beta)
- For version 1: empty (production)

The reasoning behind this approach is as follows. In this example, we have three levels (experimental, beta and production), and we assign a number to each level: 3 is the least stable version, while 1 is the most stable one.

So, for example, a user who sets their preference to experimental wants to reach the experimental version or, if there is no experimental version, the beta version, or by default production.

In effect, this means the user wants to reach all levels: 123.

The same applies to any other level chosen by the user.
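The fallback rule can be modelled in a few lines of Java. This is a hypothetical sketch of the decision logic only, not of how Istio evaluates it (the real match rules live in istiofiles/virtual-service-promotion-v1-v2-v3.yml). It assumes each level is identified by a digit (3 experimental, 2 beta, 1 production) and that an empty preference means production; PreferenceRouting, LEVELS and route are illustrative names.

```java
import java.util.Map;

public class PreferenceRouting {
    // Hypothetical mapping of level digits to deployed recommendation versions.
    static final Map<Character, String> LEVELS = Map.of(
            '3', "v3",  // experimental
            '2', "v2",  // beta
            '1', "v1"); // production

    // Returns the version for the least stable level the user opted into that
    // is still routed, falling back level by level towards production ('1').
    static String route(String userPreference, Map<Character, String> levels) {
        String pref = userPreference == null ? "" : userPreference;
        for (char level = '3'; level > '1'; level--) {
            if (pref.indexOf(level) >= 0 && levels.containsKey(level)) {
                return levels.get(level);
            }
        }
        return levels.get('1');
    }

    public static void main(String[] args) {
        for (String pref : new String[] {"123", "12", ""}) {
            System.out.println("'" + pref + "' -> " + route(pref, LEVELS));
        }
    }
}
```

Scanning from the least stable level downwards is what produces the fallback: if no experimental route exists, the next digit in the preference is tried, and production is the default.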

curl -H "user-preference: 123" customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v3 from '6953441398-tyw25': 1

curl -H "user-preference: 12" customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v2 from '3490080923-usw67': 2

curl customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v1 from '9834514598-knc40': 10

One interesting point here is that if you open istiofiles/virtual-service-promotion-v1-v2-v3.yml, you’ll see that the header is set to baggage-user-preference, while in curl it is set to user-preference.

The reason is that the customer service (CustomerController.java) uses the io.opentracing.Tracer class to set the user-preference header as a baggage item.

In this way, the value is propagated across all services without having to copy it manually in each service, since by default headers are not propagated automatically.
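The header renaming can be illustrated with a simplified, self-contained model of baggage propagation. It assumes, as the header name in the virtual service suggests, that the tracer writes each baggage item onto outgoing requests as a header prefixed with baggage-. The class and the inject/extract methods are hypothetical stand-ins for the tracer's propagation step; in the OpenTracing API itself, a baggage item is attached to a span with Span.setBaggageItem(key, value).

```java
import java.util.HashMap;
import java.util.Map;

public class BaggagePropagation {
    // Assumed prefix, matching the header name used in the virtual service.
    static final String BAGGAGE_PREFIX = "baggage-";

    // Simplified "inject": writes every baggage item as an outgoing header.
    static Map<String, String> inject(Map<String, String> baggage) {
        Map<String, String> headers = new HashMap<>();
        baggage.forEach((key, value) -> headers.put(BAGGAGE_PREFIX + key, value));
        return headers;
    }

    // Simplified "extract": recovers baggage items from incoming headers.
    static Map<String, String> extract(Map<String, String> headers) {
        Map<String, String> baggage = new HashMap<>();
        headers.forEach((name, value) -> {
            if (name.startsWith(BAGGAGE_PREFIX)) {
                baggage.put(name.substring(BAGGAGE_PREFIX.length()), value);
            }
        });
        return baggage;
    }

    public static void main(String[] args) {
        // In customer, the user-preference value is stored as a baggage item.
        Map<String, String> baggage = Map.of("user-preference", "123");
        // customer -> preference: the item travels as baggage-user-preference.
        Map<String, String> toPreference = inject(baggage);
        System.out.println(toPreference);
        // preference -> recommendation: re-injected without manual copying.
        Map<String, String> toRecommendation = inject(extract(toPreference));
        System.out.println(toRecommendation);
    }
}
```

Because every hop re-injects the same baggage, the sidecar in front of recommendation sees baggage-user-preference even though only customer received the original user-preference header.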

Now let’s promote the versions, so that version 1 is no longer reachable, version 2 becomes the new production and version 3 becomes the new beta.

istioctl replace -f istiofiles/virtual-service-promoted-v3.yml -n tutorial

curl -H "user-preference: 123" customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v3 from '6953441398-tyw25': 2

curl -H "user-preference: 12" customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v3 from '6953441398-tyw25': 3

curl customer-tutorial.$(minishift ip).nip.io

customer => preference => recommendation v2 from '3490080923-usw67': 3

Notice that the request with the preference set to experimental now falls back to beta, as there is no longer an experimental version.

So versions of a service are promoted without redeploying anything or changing your code.
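The promotion step can be pictured as retiring the production version and shifting every other version one level towards production. The following hypothetical Java sketch models the levels as a list ordered from production to experimental (it does not read or generate Istio files) and resolves a requested level with the same fall-back-to-more-stable rule, reproducing the behaviour of the three curl calls before and after the promotion.

```java
import java.util.List;

public class ServicePromotion {
    // Level indexes into the list: 0 = production, 1 = beta, 2 = experimental.
    static final int PRODUCTION = 0, BETA = 1, EXPERIMENTAL = 2;

    // Promotion: the production version is retired and every other version
    // moves one level closer to production.
    static List<String> promote(List<String> levels) {
        return List.copyOf(levels.subList(1, levels.size()));
    }

    // A request for a level that no longer exists falls back to the
    // least stable level that still does.
    static String resolve(List<String> levels, int requestedLevel) {
        return levels.get(Math.min(requestedLevel, levels.size() - 1));
    }

    public static void main(String[] args) {
        List<String> before = List.of("v1", "v2", "v3");
        List<String> after = promote(before);
        for (int level : new int[] {EXPERIMENTAL, BETA, PRODUCTION}) {
            System.out.println(level + ": " + resolve(before, level) + " -> " + resolve(after, level));
        }
    }
}
```

In the tutorial itself the same shift happens by replacing the virtual service, which is why no Deployment has to change.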