OpenShift Streams for Apache Kafka and Kafka Streams Workshop

Introduction

The OpenShift Streams for Apache Kafka service is no longer available, so this tutorial can no longer be completed. Consider using AMQ Streams, the technology that powered OpenShift Streams for Apache Kafka, instead. If you’re still interested in learning about Apache Kafka and Kubernetes, try this tutorial on developers.redhat.com.

Welcome to the OpenShift Streams for Apache Kafka and Kafka Streams Workshop.

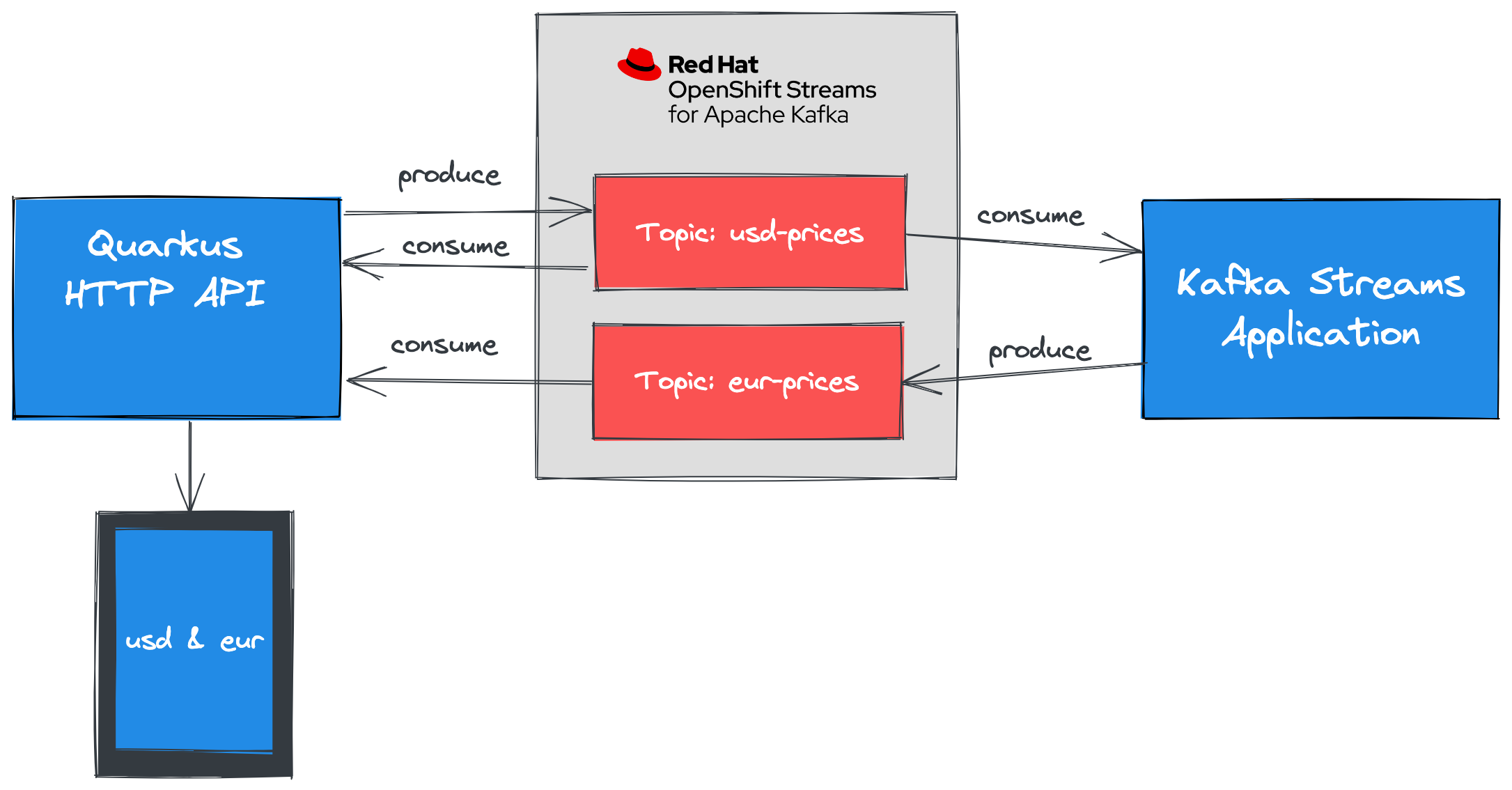

As a developer of applications and services, you can use OpenShift Streams for Apache Kafka to create and set up Kafka instances and connect your applications and services to these instances. OpenShift Streams for Apache Kafka is a managed cloud service that enables you to add Kafka data-streaming functionality to your applications without having to install, configure, run, and maintain your own Kafka clusters.

Kafka Streams provides a DSL (Domain Specific Language) that enables developers to create scalable data stream processing pipelines with minimal amounts of code. The DSL supports stateless operations, such as filtering an existing topic to create a new topic containing only the records that match specific criteria. It also supports stateful operations, such as producing aggregates and joining records from multiple input streams.
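To make the stateless/stateful distinction concrete, here is a minimal sketch that needs only the JDK. It deliberately does not use the Kafka Streams API: an in-memory list stands in for a topic, and the `Order` type, the field names, and the threshold are all hypothetical, chosen only for illustration. In the Kafka Streams DSL, the corresponding operations on a `KStream` would be `filter(...)` for the stateless case and `groupByKey().count()` for the stateful one.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class StreamOpsSketch {

    // Hypothetical record type standing in for a keyed Kafka record.
    static final class Order {
        final String customer; // plays the role of the record key
        final int amount;      // plays the role of the record value

        Order(String customer, int amount) {
            this.customer = customer;
            this.amount = amount;
        }

        @Override
        public String toString() {
            return customer + ":" + amount;
        }
    }

    // Stateless: each record is judged on its own; nothing is remembered
    // between records.
    static List<Order> largeOrders(List<Order> orders, int threshold) {
        return orders.stream()
                .filter(o -> o.amount > threshold)
                .collect(Collectors.toList());
    }

    // Stateful: a running count per key has to be kept across records.
    static Map<String, Long> countByCustomer(List<Order> orders) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (Order o : orders) {
            counts.merge(o.customer, 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Order> orders = Arrays.asList(
                new Order("alice", 120),
                new Order("bob", 30),
                new Order("alice", 45));

        System.out.println("Large orders: " + largeOrders(orders, 100));
        System.out.println("Orders per customer: " + countByCustomer(orders));
    }
}
```

The filter can process each record in isolation, whereas the count depends on every earlier record with the same key. That remembered per-key state is what makes an operation stateful.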

In this guided workshop you will:

- Create an instance of Red Hat OpenShift Streams for Apache Kafka.
- Create a service account to connect to the Kafka instance.
- Deploy a Quarkus application that produces records into the Kafka instance.
- Develop an application that uses the Kafka Streams DSL to produce records from existing records.

This workshop uses the Developer Sandbox for Red Hat OpenShift, which provides you with a private OpenShift environment in a shared, multi-tenant OpenShift cluster that is pre-configured with a set of developer tools, including the web-based CodeReady Workspaces IDE. As a result, you don’t need to install anything on your workstation to run this workshop.

Use the menu on the left to get started by provisioning a Kafka instance!