Monitor Application Health

20 MINUTE EXERCISE

In this lab you will learn how to monitor application health using OpenShift health probes and how to view container resource consumption using metrics.

Understanding Liveness Probes

What happens if you DON’T set up Liveness checks?

By default, Pods are designed to be resilient: if a pod dies, it gets restarted. Let’s see this happening.

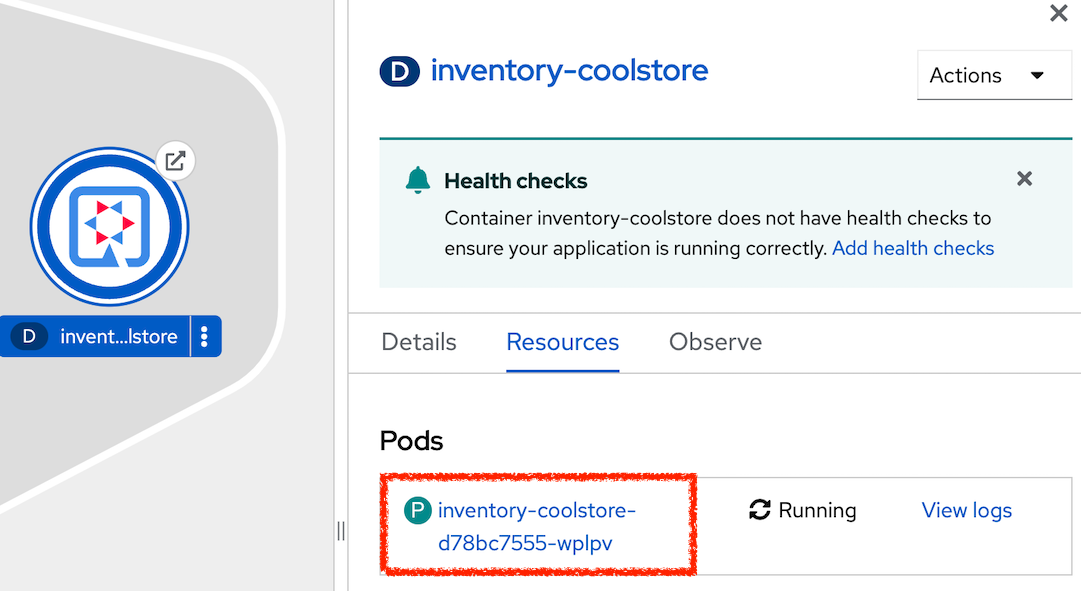

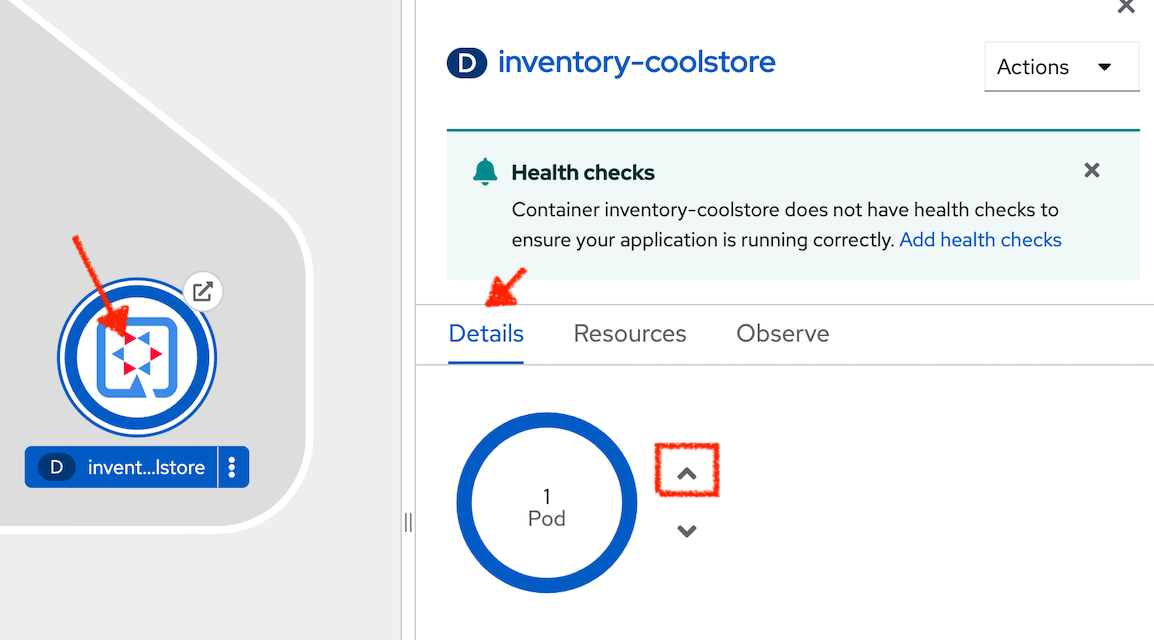

In the OpenShift Web Console, from the Developer view,

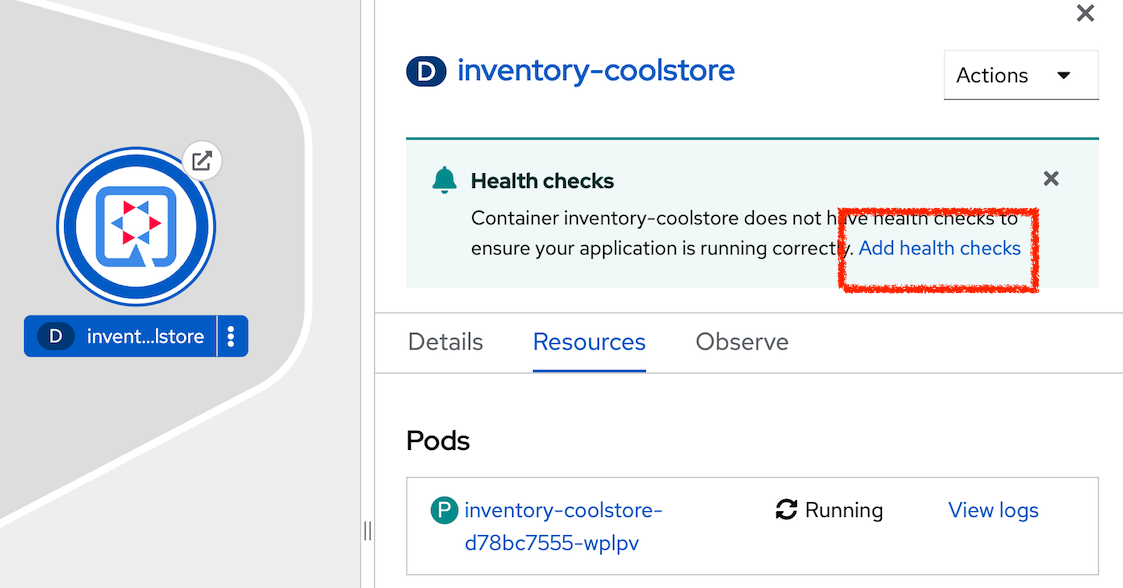

click on 'Topology' → '(D) inventory-coolstore' → 'Resources' → 'P inventory-coolstore-x-xxxxx'

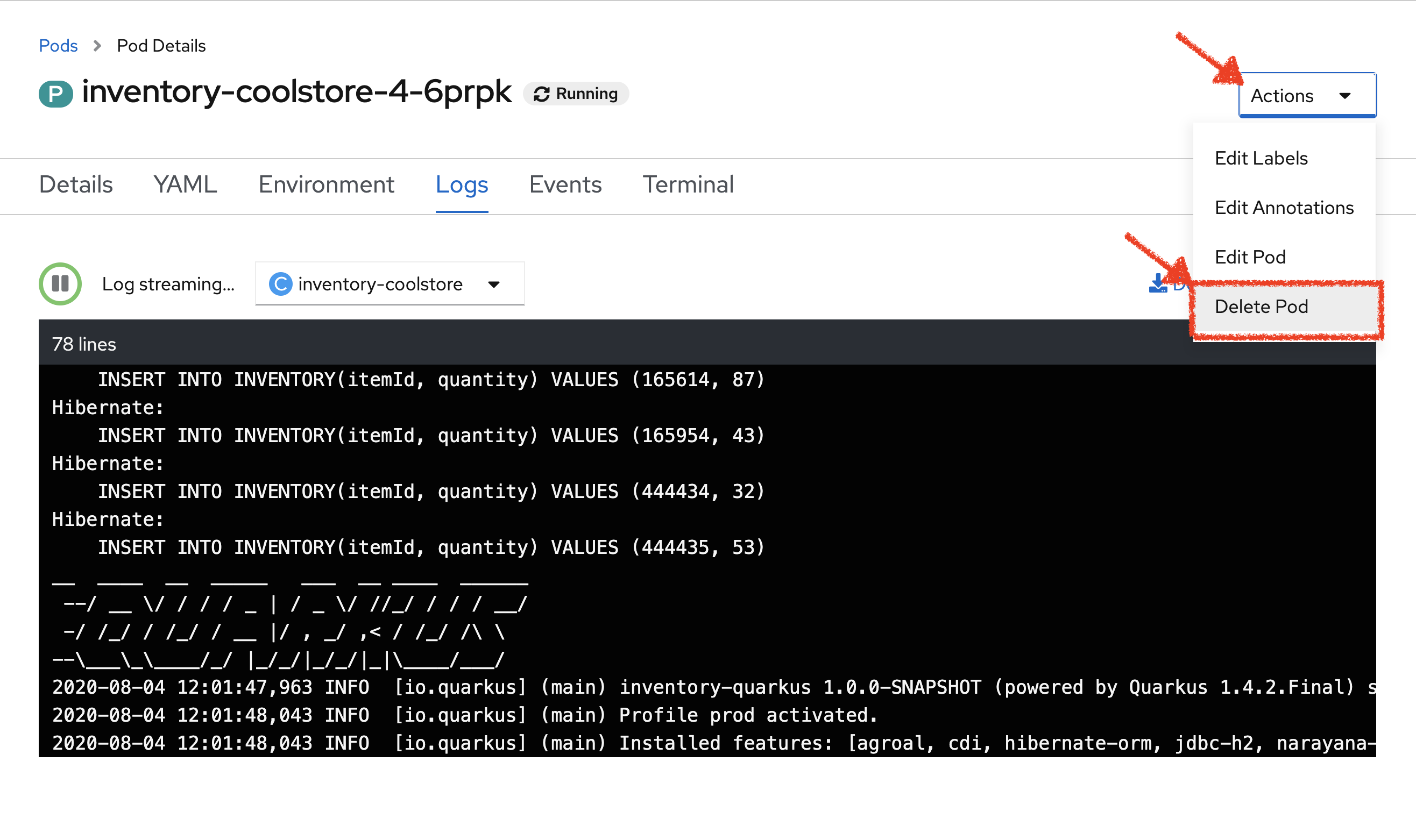

In the OpenShift Web Console, click on 'Actions' → 'Delete Pod' → 'Delete'

A new instance (pod) will be redeployed very quickly. Once the pod is deleted, try to access your Inventory Service.

However, imagine the Inventory Service is stuck in a state (stopped listening, deadlock, etc.) where it cannot perform as it should. In this case, the pod will not die immediately; it will remain in a zombie state.

To make your application more robust and reliable, a Liveness check is used to detect whether the container itself has become unresponsive. If the liveness probe fails due to a condition such as a deadlock, the container is killed and can be restarted automatically, depending on its restart policy.
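
The failure-threshold idea behind a liveness probe can be sketched as a small shell function. This is a toy model only, not how OpenShift implements probes: the function name `simulate_liveness` and its arguments are made up for illustration, and the waiting period between checks is left out so the example runs instantly.

```shell
# Toy model of a liveness probe: run a check repeatedly and report
# "restart" once it has failed FAILURE_THRESHOLD times in a row.
# Usage: simulate_liveness FAILURE_THRESHOLD MAX_ATTEMPTS CHECK_COMMAND [ARGS...]
simulate_liveness() {
  failure_threshold=$1
  max_attempts=$2
  shift 2
  consecutive_failures=0
  attempt=0
  while [ "$attempt" -lt "$max_attempts" ]; do
    attempt=$((attempt + 1))
    if "$@" >/dev/null 2>&1; then
      consecutive_failures=0   # any success resets the counter
    else
      consecutive_failures=$((consecutive_failures + 1))
    fi
    if [ "$consecutive_failures" -ge "$failure_threshold" ]; then
      echo "restart"
      return 0
    fi
  done
  echo "healthy"
}

simulate_liveness 3 5 true    # check always succeeds: prints "healthy"
simulate_liveness 3 5 false   # check always fails: prints "restart"
```

In a real probe the check would be an HTTP request to the application's health endpoint rather than `true` or `false`.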

Understanding Readiness Probes

What happens if you DON’T set up Readiness checks?

Let’s imagine you have traffic coming into the Inventory Service. We can simulate that with a simple script.

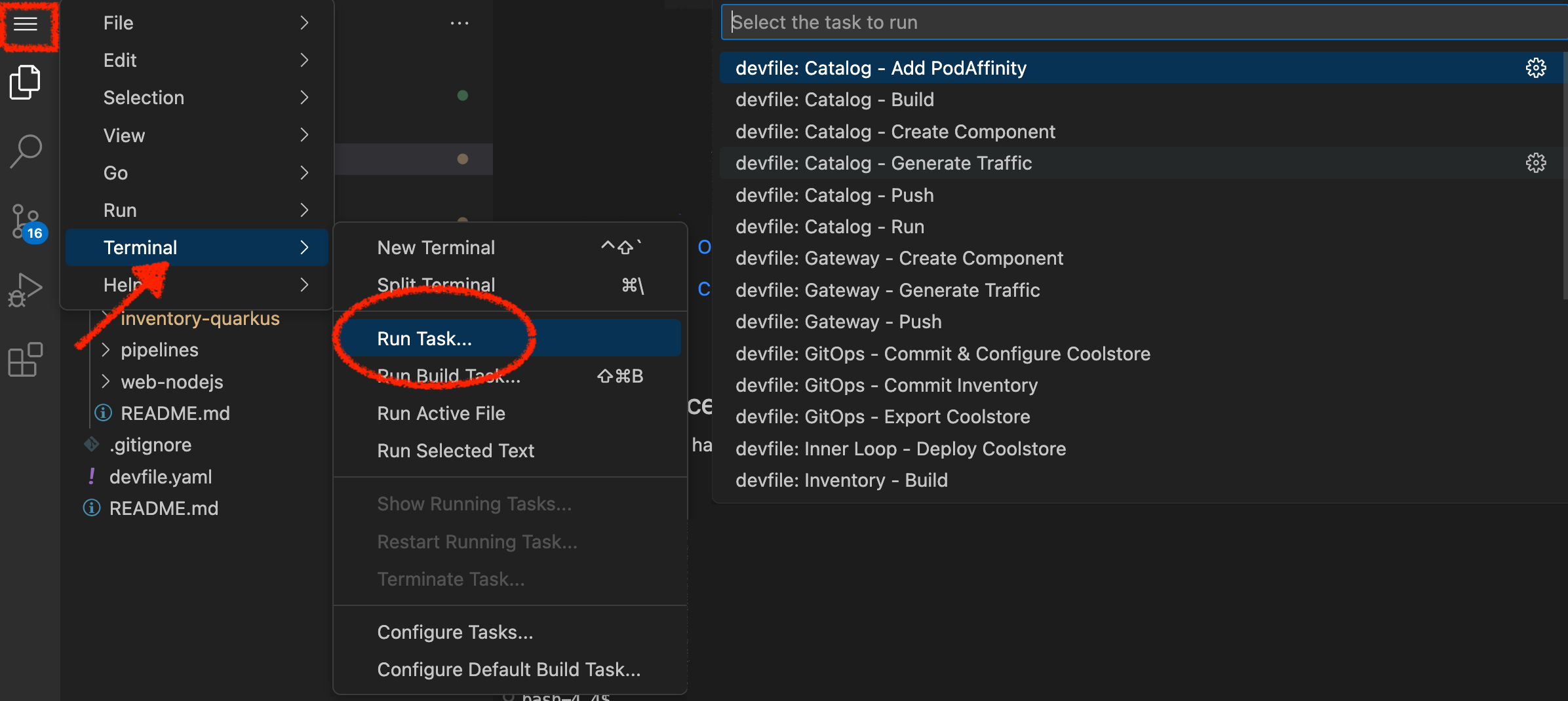

In your Workspace,

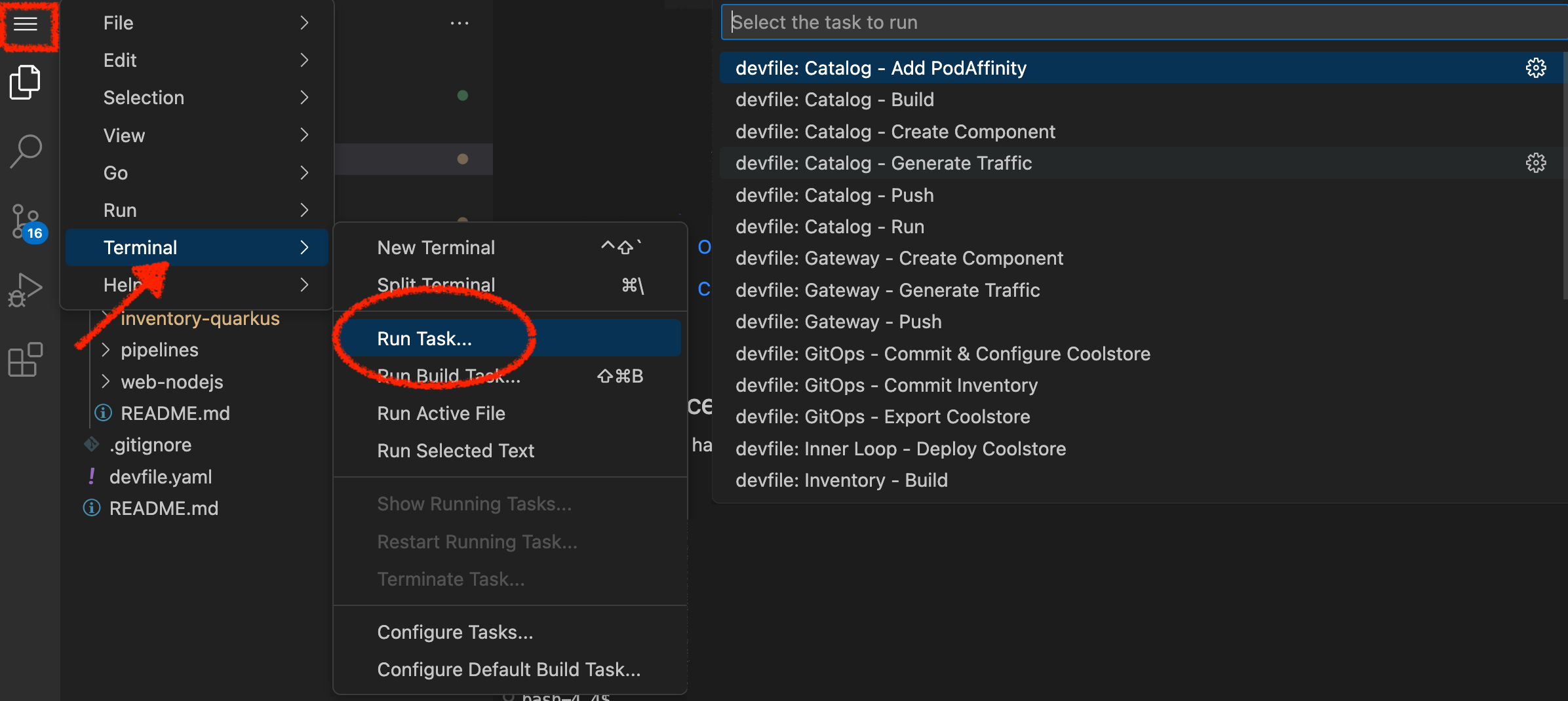

Click on 'Terminal' → 'Run Task…' → 'devfile: Inventory - Generate Traffic'

Alternatively, execute the following commands in a terminal window (to open one, click on 'Terminal' → 'New Terminal'):

    for i in {1..60}
    do
        # Request one inventory item and keep only the HTTP status code
        if [ "$(curl -s -w "%{http_code}" -o /dev/null http://inventory-coolstore.my-project%USER_ID%.svc:8080/api/inventory/329299)" = "200" ]
        then
            MSG="\033[0;32mThe request to Inventory Service has succeeded\033[0m"
        else
            MSG="\033[0;31mERROR - The request to Inventory Service has failed\033[0m"
        fi
        echo -e "$MSG"
        sleep 1s
    done

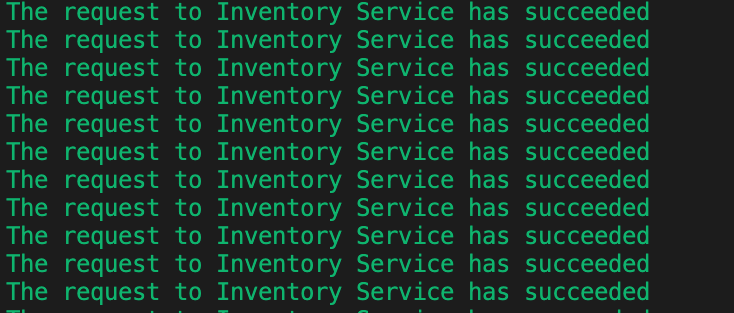

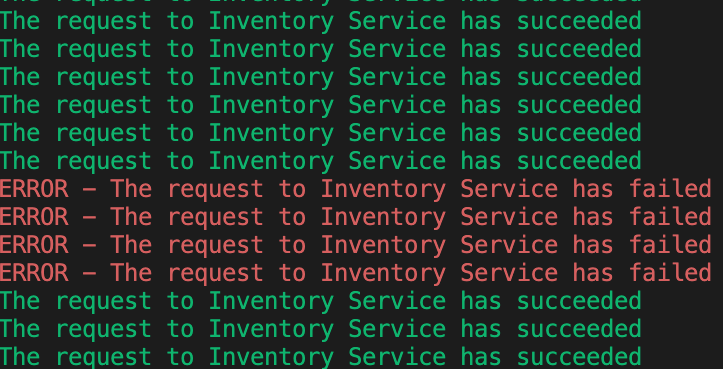

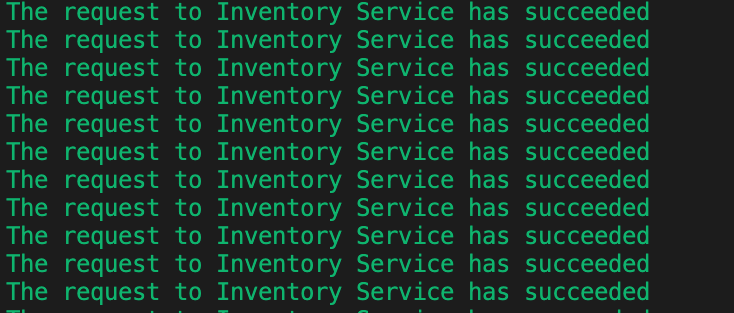

You should have the following output:

Now let’s scale out your Inventory Service to 2 instances.

In the OpenShift Web Console, from the Developer view, click on 'Topology' → '(D) inventory-coolstore' → 'Details', then click once on the up arrow on the right side of the blue pod circle.

You should see the 2 instances (pods) running.

Now, switch back to your Workspace and check the output of the 'Inventory Generate Traffic' task.

Why do some requests fail? Because as soon as the container is created, traffic is sent to this new instance even if the application is not ready. (The Inventory Service takes a few seconds to start up.)

In order to prevent this behaviour, a Readiness check is needed. It determines if the container is ready to service requests. If the readiness probe fails, the endpoints controller ensures the container has its IP address removed from the endpoints of all services. A readiness probe can be used to signal to the endpoints controller that even though a container is running, it should not receive any traffic from a proxy.
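
As a rough mental model of what the endpoints controller does, the following sketch filters a list of pods down to those that are ready. It is an illustration only: the `pod-name:state` pairs and the function name `ready_endpoints` are hypothetical and do not correspond to any OpenShift command or API.

```shell
# Toy model of endpoint selection (an illustration, not OpenShift code).
# Each argument is a hypothetical "pod-name:state" pair; only pods whose
# state is "ready" are printed, i.e. would receive traffic from the service.
ready_endpoints() {
  for entry in "$@"; do
    case "$entry" in
      *:ready) echo "${entry%%:*}" ;;
    esac
  done
}

# One pod is ready, the freshly scaled-out one is still starting.
ready_endpoints inventory-a:ready inventory-b:starting   # prints "inventory-a"
```

Without a readiness probe, every running pod is treated as ready, which is why the new instance received requests before it could answer them.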

First, scale down your Inventory Service to 1 instance. In the OpenShift Web Console, from the Developer view, click on 'Topology' → '(D) inventory-coolstore' → 'Details', then click once on the down arrow on the right side of the blue pod circle.

Now let’s fix some of these problems.

Configuring Liveness Probes

SmallRye Health is a Quarkus extension that implements the MicroProfile Health specification. It allows applications to provide information about their state to external viewers, which is typically useful in cloud environments where automated processes must be able to determine whether the application should be discarded or restarted.

Let’s add the needed dependency. In your Workspace, edit the '/projects/workshop/labs/inventory-quarkus/pom.xml' file and add:

    <dependency>
        <groupId>io.quarkus</groupId>
        <artifactId>quarkus-smallrye-health</artifactId>
    </dependency>

Then, build and push the updated Inventory Service to the OpenShift cluster.

Click on 'Terminal' → 'Run Task…' → 'devfile: Inventory - Push Component'

Alternatively, execute the following commands in a terminal window (to open one, click on 'Terminal' → 'New Terminal'):

    cd /projects/workshop/labs/inventory-quarkus
    mvn package -Dquarkus.container-image.build=true -DskipTests -Dquarkus.container-image.group=$(oc project -q) -Dquarkus.kubernetes-client.trust-certs=true

Wait until the build is complete, then delete the Inventory Pod to make it start again with the new code.

    oc delete pod -l component=inventory -n my-project%USER_ID%

It will take a few seconds to restart. Then verify that the health endpoint works for the Inventory Service using curl.

In your Workspace, execute the following command in a terminal window (to open one, click on 'Terminal' → 'New Terminal'). It may take a few attempts while the pod restarts.

    curl -w "\n" http://inventory-coolstore.my-project%USER_ID%.svc:8080/q/health

You should have the following output:

{

"status": "UP",

"checks": [

{

"name": "Database connection(s) health check",

"status": "UP"

}

]

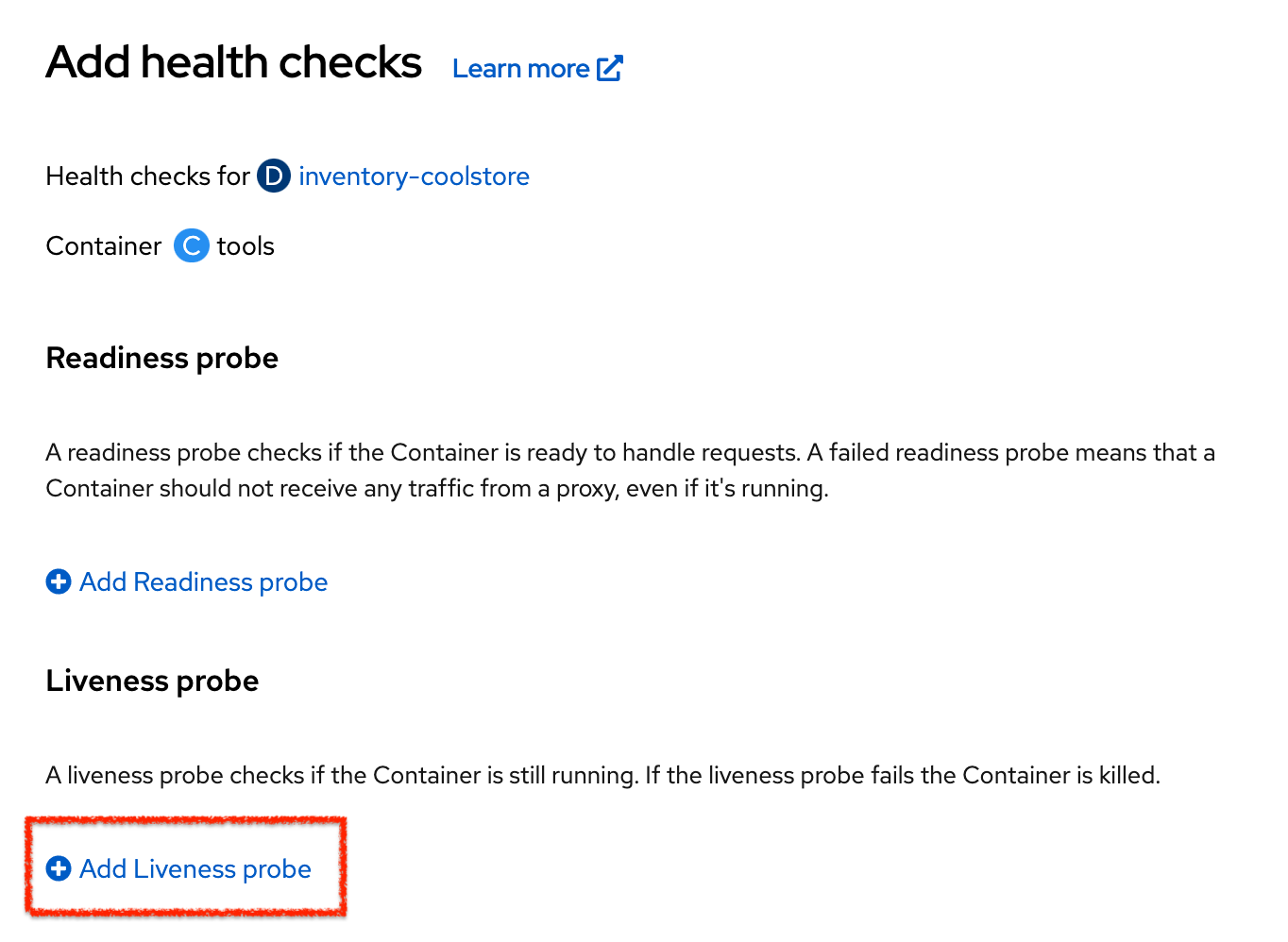

}In the OpenShift Web Console, from the Developer view,

click on 'Topology' → '(D) inventory-coolstore' → 'Add Health Checks'.

Then click on 'Add Liveness Probe'

Enter the following information:

| Parameter | Value |
|---|---|
| Type | HTTP GET |
| Use HTTPS | Unchecked |
| HTTP Headers | Empty |
| Path | /q/health/live |
| Port | 8080 |
| Failure Threshold | 3 |
| Success Threshold | 1 |
| Initial Delay | 10 |
| Period | 10 |
| Timeout | 1 |

Finally click on the check icon. But don’t click Add yet, we have more probes to configure.

Configuring Inventory Readiness Probes

Now repeat the same task for the Inventory service, but this time set the Readiness probes:

| Parameter | Value |
|---|---|
| Type | HTTP GET |
| Use HTTPS | Unchecked |
| HTTP Headers | Empty |
| Path | /q/health/ready |
| Port | 8080 |
| Failure Threshold | 3 |
| Success Threshold | 1 |
| Initial Delay | 0 |
| Period | 5 |
| Timeout | 1 |

Finally click on the check icon and the 'Add' button.

OpenShift automates deployments using deployment triggers that react to changes to the container image or configuration. Therefore, as soon as you define the probes, OpenShift automatically redeploys the pod using the new configuration, including the liveness and readiness probes.
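
The same settings can also be expressed on the command line with `oc set probe`. The sketch below only prints the commands so you can review them before running anything. It assumes the Deployment is named inventory-coolstore (as shown in Topology); the helper function `probe_command` is hypothetical, and the timeout and thresholds are hard-coded to the values used in the two tables above.

```shell
# Print (do not run) an 'oc set probe' command for an HTTP GET probe on port 8080.
# Usage: probe_command DEPLOYMENT KIND PATH INITIAL_DELAY PERIOD
#   KIND is either "liveness" or "readiness"
probe_command() {
  echo "oc set probe deployment/$1 --$2" \
       "--get-url=http://:8080$3" \
       "--initial-delay-seconds=$4 --period-seconds=$5" \
       "--timeout-seconds=1 --failure-threshold=3 --success-threshold=1"
}

probe_command inventory-coolstore liveness  /q/health/live  10 10
probe_command inventory-coolstore readiness /q/health/ready 0  5
```

To apply a printed command, paste it into a terminal; add `-n my-project%USER_ID%` if that is not your current project.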

Testing Inventory Readiness Probes

Now let’s test it as you did previously.

Generate traffic to the Inventory Service and then, in the OpenShift Web Console, scale out the Inventory Service to 2 instances (pods).

In your Workspace, check the output of the 'Inventory Generate Traffic' task.

You should not see any errors. This means that you can now scale out your Inventory Service with no downtime.

Now scale down your Inventory Service back to 1 instance.

Catalog Services Probes

Spring Boot Actuator is a sub-project of Spring Boot which adds health and management HTTP endpoints to the application. Spring Boot Actuator is enabled by adding the org.springframework.boot:spring-boot-starter-actuator dependency to the Maven project, which is already done for the Catalog Service.

Verify that the health endpoint works for the Catalog Service using curl.

In your Workspace, in the terminal window, execute the following command:

    curl -w "\n" http://catalog-coolstore.my-project%USER_ID%.svc:8080/actuator/health

You should have the following output:

    {"status":"UP"}

Liveness and Readiness health check values have already been set for this service as part of the build and deployment using Eclipse JKube in combination with the Spring Boot Actuator.

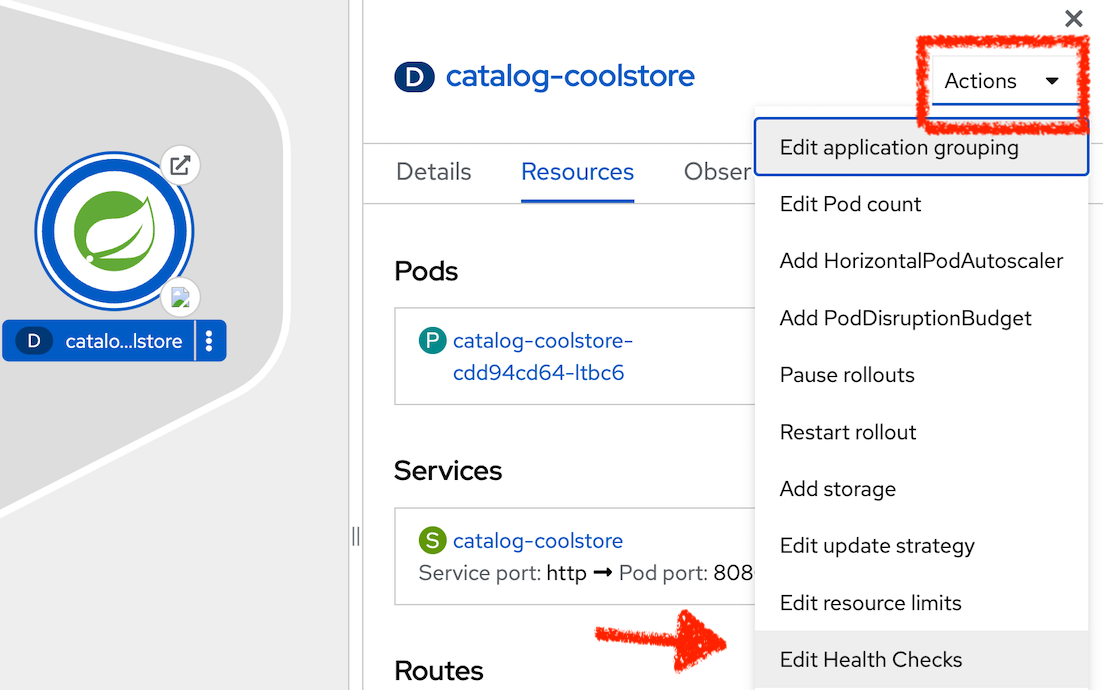

You can check this in the OpenShift Web Console, from the Developer view: click on 'Topology' → '(D) catalog-coolstore' → 'Actions' → 'Edit Health Checks'.

Understanding Startup Probes

Startup probes are similar to liveness probes but are only executed at startup. When a startup probe is configured, the other probes are disabled until it succeeds.

Some (legacy) applications might need extra time for their first initialization. In such cases, setting a longer liveness interval might compromise the main benefit of this probe, i.e. providing a fast response to stuck states.

Startup probes are useful to cover this worst-case startup time.

Monitoring All Application Healths

Now that you understand and know how to configure Readiness, Liveness and Startup probes, let’s confirm your expertise!

Configure the remaining Probes for Inventory and Catalog using the following information:

| Inventory Service | Startup |
|---|---|
| Type | HTTP GET |
| Use HTTPS | Unchecked |
| HTTP Headers | Empty |
| Path | /q/health/live |
| Port | 8080 |
| Failure Threshold | 3 |
| Success Threshold | 1 |
| Initial Delay | 0 |
| Period | 5 |
| Timeout | 1 |

| Catalog Service | Startup |
|---|---|
| Type | HTTP GET |
| Use HTTPS | Unchecked |
| HTTP Headers | Empty |
| Path | /actuator/health |
| Port | 8080 |
| Failure Threshold | 15 |
| Success Threshold | 1 |
| Initial Delay | 0 |
| Period | 10 |
| Timeout | 1 |
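
A startup probe gives the application roughly initial delay + failure threshold × period seconds to start before the container is restarted. A small sketch of that arithmetic, using the values from the two Startup tables above (the function name `startup_budget_seconds` is made up for illustration, and the result is an approximation that ignores the per-request timeout):

```shell
# Approximate time (in seconds) an application has to start before the
# startup probe gives up: initial delay + failure threshold * period.
# Usage: startup_budget_seconds INITIAL_DELAY FAILURE_THRESHOLD PERIOD
startup_budget_seconds() {
  echo $(( $1 + $2 * $3 ))
}

startup_budget_seconds 0 3 5     # Inventory Service: prints 15
startup_budget_seconds 0 15 10   # Catalog Service: prints 150
```

The larger budget for the Catalog Service covers a slower startup without lengthening its liveness interval.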

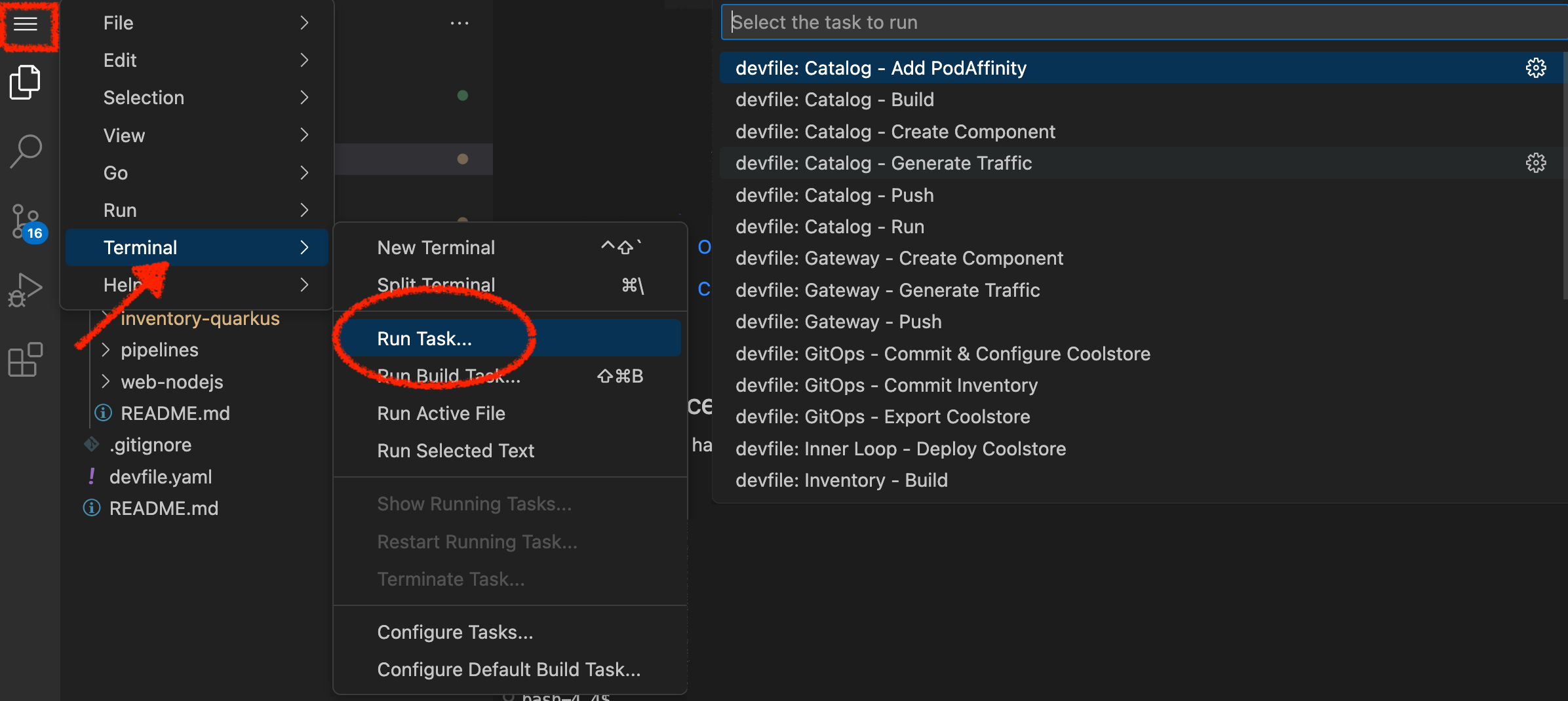

Finally, let’s configure probes for Gateway and Web Service.

In your Workspace, click on 'Terminal' → 'Run Task…' → 'devfile: Probes - Configure Gateway & Web'

Monitoring Applications Metrics

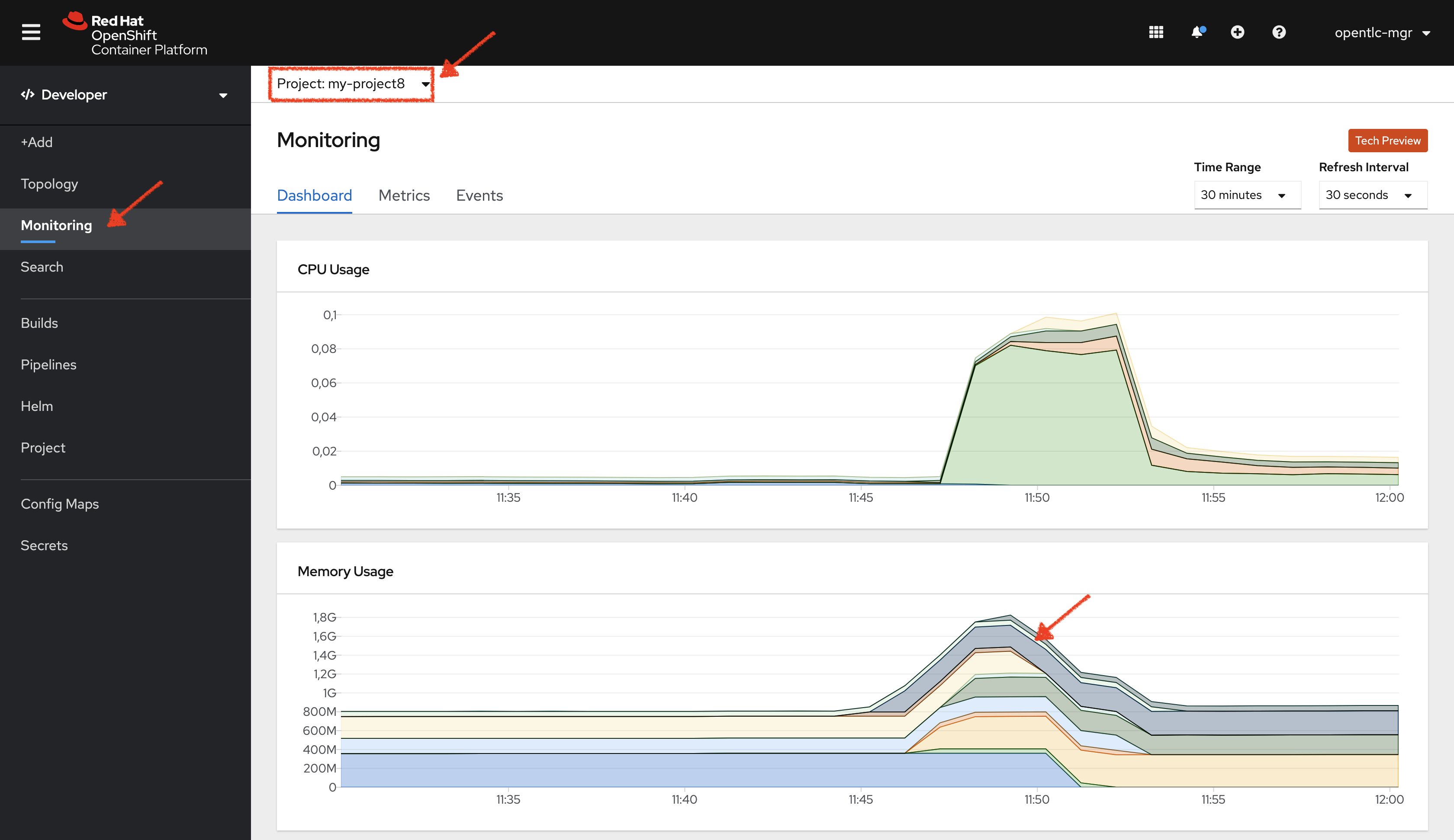

Metrics are another important aspect of monitoring applications. They are required to gain visibility into how the application behaves, particularly when identifying issues.

OpenShift provides container metrics out-of-the-box and displays how much memory, CPU and network each container has been consuming over time.

In the OpenShift Web Console, from the Developer view, click on 'Observe' then select your 'my-project%USER_ID%' project.

In the project overview, you can see the different Resource Usage sections. Click on one graph to get more details.

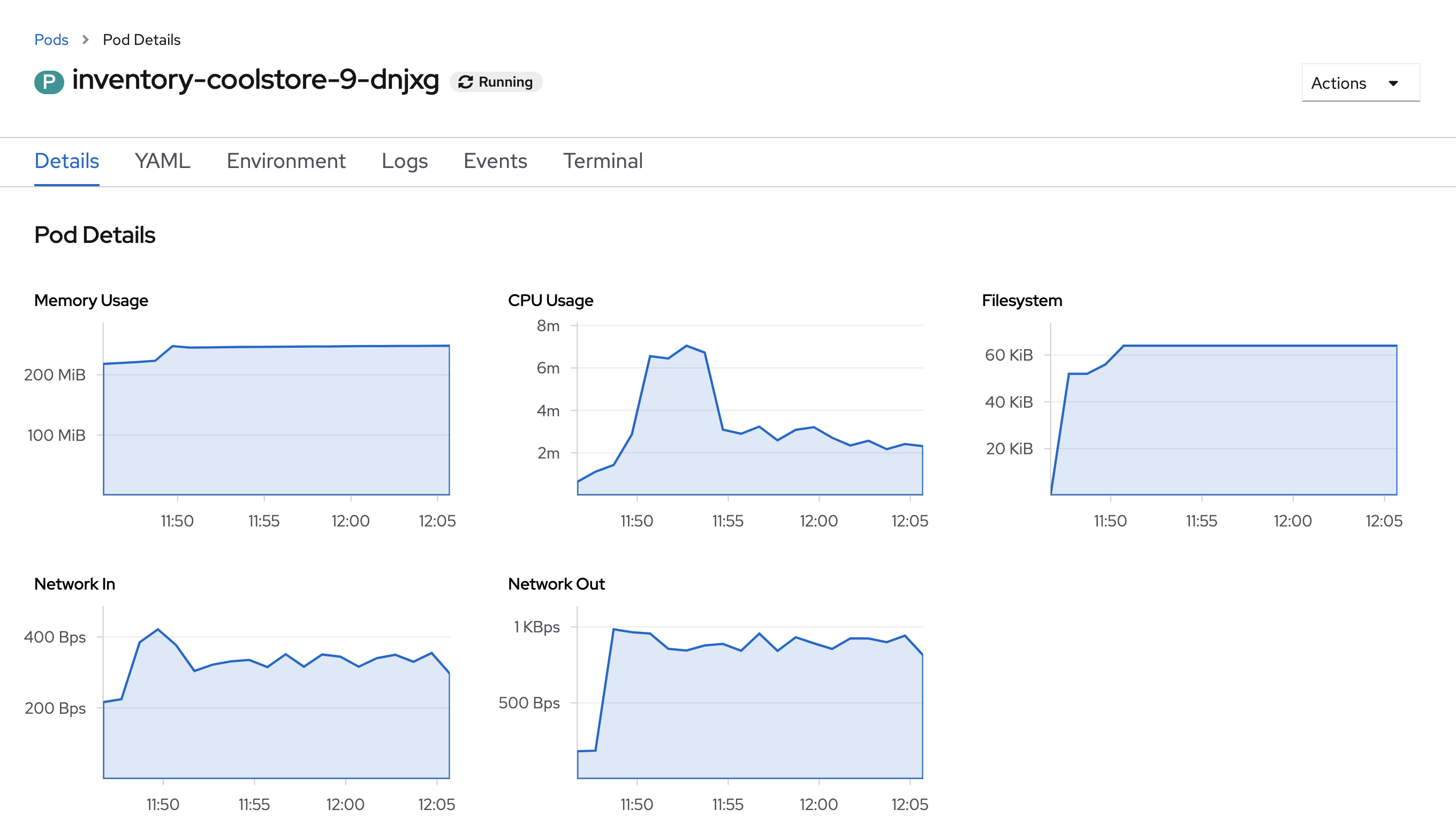

From the Developer view, click on 'Topology' → any Deployment (D) and click on the associated Pod (P)

In the pod overview, you can see a more detailed view of the pod consumption. The graphs can be found under the Metrics heading, or Details in earlier versions of the OpenShift console.

Well done! You are ready to move on to the next lab.